Diffusion Probabilistic Models

Physics undergraduate computational project. Universidad de los Andes (2023)

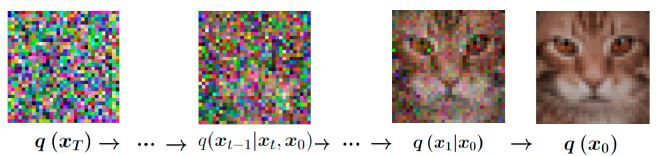

In machine learning, diffusion probabilistic models have emerged as a prominent class of generative models. Their objective is to learn a diffusion process that describes the probability distribution of a given dataset. The approach has two key elements: a forward process, which gradually corrupts the data with noise, and a backward (reverse) process, which learns to undo that corruption. These models have been applied successfully to tasks such as denoising, inpainting, and image generation, and they have proven capable of generating realistic data, with notable examples including text-conditioned image generators such as DALL-E 2 and Stable Diffusion.
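As an illustration of the forward process mentioned above, here is a minimal sketch in Python. It assumes the Gaussian noising scheme commonly used in denoising diffusion models, with a linear variance schedule; the function names (`linear_beta_schedule`, `forward_diffuse`), the schedule values, and the 8×8 random array standing in for an image are all hypothetical choices made for this example, not details taken from the project.

```python
import numpy as np

def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances (illustrative values, assumed for this sketch)."""
    return np.linspace(beta_start, beta_end, num_steps)

def forward_diffuse(x0, t, betas, rng):
    """Sample a noisy x_t directly from the clean sample x0.

    Uses the closed form
        x_t = sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * noise,
    where alpha_bar_t is the cumulative product of (1 - beta_s) up to step t.
    """
    alpha_bar = np.cumprod(1.0 - betas)
    noise = rng.standard_normal(x0.shape)
    x_t = np.sqrt(alpha_bar[t]) * x0 + np.sqrt(1.0 - alpha_bar[t]) * noise
    return x_t, noise

rng = np.random.default_rng(0)
betas = linear_beta_schedule(1000)

# Stand-in for an image: random pixel values in [-1, 1].
x0 = rng.uniform(-1.0, 1.0, size=(8, 8))

x_early, noise_early = forward_diffuse(x0, 10, betas, rng)   # still close to x0
x_late, noise_late = forward_diffuse(x0, 999, betas, rng)    # almost pure noise
```

After a few steps the sample is still strongly correlated with the original, while at the final step almost nothing of the original remains. In this setting the backward process would typically be a neural network trained to predict `noise` from `x_t` and `t`; that part is not shown here.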

The essence of diffusion-based generative models can be traced back to statistical physics, where diffusion is modeled by describing the Brownian motion of particles through a physical system. Drawing inspiration from these concepts, diffusion generative models adopt diffusion as their central process. By learning how images diffuse in an abstract space, these models capture inherent patterns and generate synthetic data that reflects the underlying structure of real datasets. This connection to statistical physics provides a solid theoretical foundation and allows readers with some background in physics to understand how this and other machine learning methods operate. Machine learning itself has seen significant development over the last decade.

